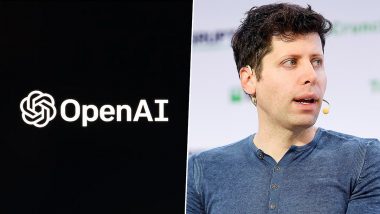

San Francisco, May 28: The Board of ChatGPT maker OpenAI has formed a Safety and Security Committee led by directors Sam Altman (CEO), Bret Taylor (Chair), Adam D’Angelo, and Nicole Seligman, the company said on Tuesday. According to the AI startup, the committee will be responsible for making recommendations to the full Board on critical safety and security decisions for the company’s projects and operations.

"OpenAI has recently begun training its next frontier model and we anticipate the resulting systems to bring us to the next level of capabilities on our path to AGI," OpenAI said in a blog post. "While we are proud to build and release models that are industry-leading on both capabilities and safety, we welcome a robust debate at this important moment," it added.

The first task of this committee will be to evaluate and further develop OpenAI’s processes and safeguards over the next 90 days. After 90 days, the committee will share its suggestions with the full Board. "Following the full Board’s review, OpenAI will publicly share an update on adopted recommendations in a manner that is consistent with safety and security," the company mentioned.

In addition, the ChatGPT maker said that OpenAI technical and policy experts Aleksander Madry (Head of Preparedness), Lilian Weng (Head of Safety Systems), John Schulman (Head of Alignment Science), Matt Knight (Head of Security), and Jakub Pachocki (Chief Scientist) will also be on the committee.

(The above story first appeared on LatestLY on May 28, 2024 06:22 PM IST. For more news and updates on politics, world, sports, entertainment and lifestyle, log on to our website latestly.com).
