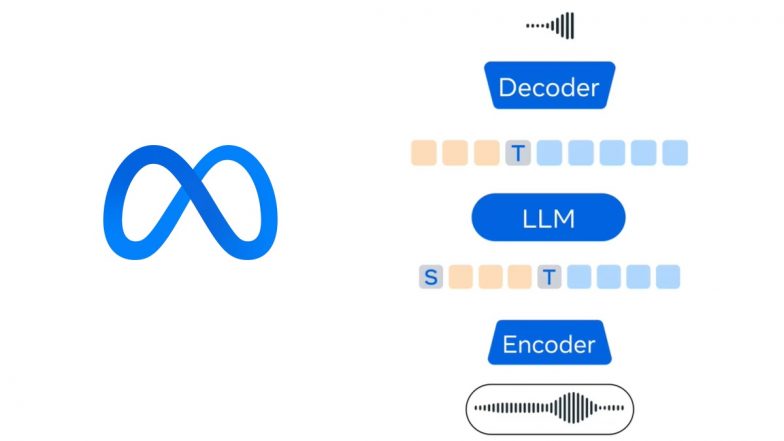

Mark Zuckerberg-run Meta has released its first open-source multimodal language model, called "Meta Spirit LM". According to the company, the model freely mixes text and speech and overcomes limitations of earlier approaches to produce more natural-sounding speech. Meta said many existing AI voice experiences use automatic speech recognition (ASR) techniques to process speech before synthesizing with an LLM to generate text, which compromises the expressive aspects of speech. Meta said its Spirit LM models can overcome these limitations for both inputs and outputs, producing more natural-sounding speech while also learning new tasks across ASR, text-to-speech (TTS), and speech classification.

Meta Launches Spirit LM Model for More Natural-Sounding Speech

Today we released Meta Spirit LM — our first open source multimodal language model that freely mixes text and speech.

Many existing AI voice experiences today use ASR to techniques to process speech before synthesizing with an LLM to generate text — but these approaches… pic.twitter.com/gMpTQVq0nE

— AI at Meta (@AIatMeta) October 18, 2024

